586 Mobile Phone Sensors: Assessment and Review

586.1 Learning Objectives

This assessment will test your understanding of:

- Smartphone sensor types and their applications

- Web APIs for sensor access

- Participatory sensing principles

- Privacy protection techniques

- Battery optimization strategies

- Sensor fusion for navigation

586.2 Knowledge Check

Test your knowledge of mobile phone sensing and its applications in IoT systems.

586.3 Comprehensive Review Quiz

586.4 Chapter Summary

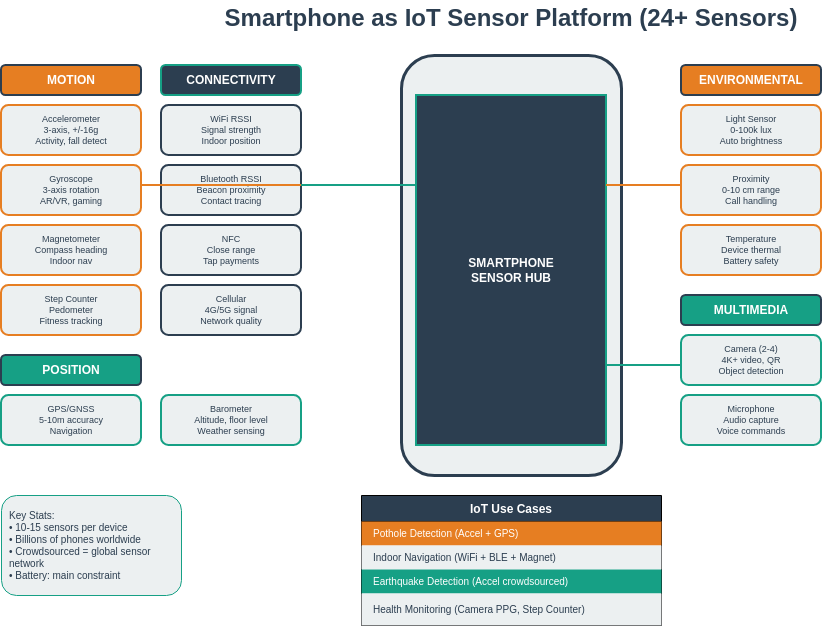

Smartphones are ubiquitous multi-sensor platforms that extend IoT capabilities to billions of users worldwide, offering 10+ sensors (motion, position, environmental, multimedia) combined with powerful processors, always-on connectivity, and rich user interfaces. This unique combination enables participatory sensing applications where volunteer users contribute data for environmental monitoring, traffic analysis, and public health tracking.

Web-based sensing through standardized browser APIs (the Generic Sensor API, the Geolocation API, and DeviceOrientation events), often delivered as Progressive Web Apps, enables cross-platform sensor access without native app development, although browser support for some of these APIs varies. These browser-based approaches lower deployment barriers and enable rapid prototyping of mobile IoT applications. Native frameworks such as React Native provide deeper sensor access and better performance for production applications.
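The Geolocation API reports each fix as a `GeolocationCoordinates` object with `latitude`, `longitude`, and `accuracy` (in meters) fields. A common next step, in the browser or on a server, is to drop imprecise fixes and measure the distance traveled. The Python sketch below assumes the fixes have already been exported into dictionaries that reuse those field names; the 50 m accuracy threshold and the names `haversine_m` and `track_length_m` are illustrative choices, and the haversine formula treats the Earth as a sphere.

```python
import math
from typing import Iterable, Mapping, Optional

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical-Earth assumption


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def track_length_m(fixes: Iterable[Mapping[str, float]], max_accuracy_m: float = 50.0) -> float:
    """Sum the distance between consecutive fixes, ignoring any fix whose
    reported accuracy radius (meters) is larger than max_accuracy_m."""
    total = 0.0
    prev: Optional[Mapping[str, float]] = None
    for fix in fixes:
        if fix["accuracy"] > max_accuracy_m:
            continue  # too imprecise to trust; skip it entirely
        if prev is not None:
            total += haversine_m(prev["latitude"], prev["longitude"],
                                 fix["latitude"], fix["longitude"])
        prev = fix
    return total
```

Discarding fixes with a large accuracy radius is a crude alternative to full sensor fusion: it gives up some samples in exchange for fewer spurious jumps in the track.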

Privacy and battery management are critical considerations for mobile sensing applications. Privacy protection techniques include data anonymization, differential privacy, k-anonymity, and informed consent mechanisms. Battery optimization strategies involve adaptive sampling rates, sensor batching, duty cycling, and intelligent use of sensor fusion to reduce redundant measurements while maintaining data quality.
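Two of the privacy techniques named above can be sketched in a few lines. The Laplace mechanism makes a count differentially private by adding noise with scale sensitivity/ε, and a k-anonymity check verifies that every generalized quasi-identifier (here, a location rounded to a grid cell) is shared by at least k records. This is a minimal Python illustration rather than a vetted privacy library; the function names, the 2-decimal rounding, and the assumption of a counting query with sensitivity 1 are choices made for the example.

```python
import random
from collections import Counter
from typing import Hashable, Sequence, Tuple


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query. Adding or removing one user
    changes a count by at most 1 (sensitivity 1), so the noise scale is 1/epsilon."""
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def generalize(lat: float, lon: float, decimals: int = 2) -> Tuple[float, float]:
    """Coarsen a location to a grid cell (0.01 degree of latitude is about 1.1 km)."""
    return (round(lat, decimals), round(lon, decimals))


def is_k_anonymous(quasi_ids: Sequence[Hashable], k: int) -> bool:
    """True if every quasi-identifier value is shared by at least k records."""
    return all(count >= k for count in Counter(quasi_ids).values())
```

A smaller ε adds more noise and gives stronger privacy; a deployed system would also need to track the total privacy budget spent across repeated queries.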
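The battery strategies above can be illustrated with three small pieces: an adaptive policy that lowers the sampling rate while the accelerometer signal is quiet, a batcher that hands samples off in groups so the CPU or radio wakes less often, and a duty-cycle estimate of average power. The thresholds, rates, and power figures in this Python sketch are hypothetical placeholders, not measured values for any particular phone.

```python
from dataclasses import dataclass, field
from statistics import pvariance
from typing import Callable, List


def choose_rate_hz(recent_magnitudes: List[float], still_hz: float = 1.0,
                   moving_hz: float = 50.0, threshold: float = 0.05) -> float:
    """Adaptive sampling: sample slowly while the signal barely varies, fast otherwise."""
    if len(recent_magnitudes) < 2:
        return moving_hz  # not enough history yet; stay at the fast rate
    return moving_hz if pvariance(recent_magnitudes) > threshold else still_hz


@dataclass
class Batcher:
    """Sensor batching: buffer samples and deliver them together in one wake-up."""
    batch_size: int
    flush: Callable[[List[float]], None]
    buffer: List[float] = field(default_factory=list)

    def add(self, sample: float) -> None:
        self.buffer.append(sample)
        if len(self.buffer) >= self.batch_size:
            self.flush(self.buffer)  # hand the full batch off
            self.buffer = []         # start a fresh buffer


def duty_cycle_avg_power_mw(on_fraction: float, active_mw: float, sleep_mw: float) -> float:
    """Average power of a sensor that is active for on_fraction of each period."""
    return on_fraction * active_mw + (1.0 - on_fraction) * sleep_mw
```

With these placeholder numbers, a sensor drawing 10 mW when active and 0.01 mW asleep averages about 1 mW at a 10% duty cycle, which is why duty cycling and batching are usually combined with adaptive rates.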

Participatory sensing transforms smartphones into crowdsourced sensor networks for applications ranging from air quality monitoring to traffic flow analysis and noise pollution mapping. The combination of location awareness, user context, and multi-sensor capabilities makes smartphones powerful tools for understanding urban environments and enabling smart city applications.
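For noise pollution mapping, a server typically bins crowdsourced readings into spatial cells before publishing them. Because decibels are logarithmic, the sketch below averages levels on an energy basis, 10·log10(mean(10^(L/10))), rather than averaging the dB values directly. The 0.005° cell size, the three-contributor minimum (which also limits how much any single participant reveals), and the reading format are assumptions made for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Reading = Tuple[str, float, float, float]  # (user_id, lat, lon, level_db)
Cell = Tuple[int, int]


def cell_of(lat: float, lon: float, cell_deg: float = 0.005) -> Cell:
    """Map a location to an integer grid-cell index."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def energy_average_db(levels: Iterable[float]) -> float:
    """Average sound levels on an energy basis: 10*log10(mean(10^(L/10)))."""
    powers = [10 ** (level / 10) for level in levels]
    return 10 * math.log10(sum(powers) / len(powers))


def noise_map(readings: Iterable[Reading], min_users: int = 3) -> Dict[Cell, float]:
    """Aggregate readings per cell, publishing only cells that have readings
    from at least min_users distinct contributors."""
    levels: Dict[Cell, List[float]] = defaultdict(list)
    users: Dict[Cell, Set[str]] = defaultdict(set)
    for user_id, lat, lon, level_db in readings:
        cell = cell_of(lat, lon)
        levels[cell].append(level_db)
        users[cell].add(user_id)
    return {cell: energy_average_db(vals)
            for cell, vals in levels.items() if len(users[cell]) >= min_users}
```

Energy averaging weights loud events more heavily: a 60 dB and a 70 dB reading average to about 67.4 dB, not 65 dB.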

586.5 Academic Resources

Source: Carnegie Mellon University - Building User-Focused Sensing Systems

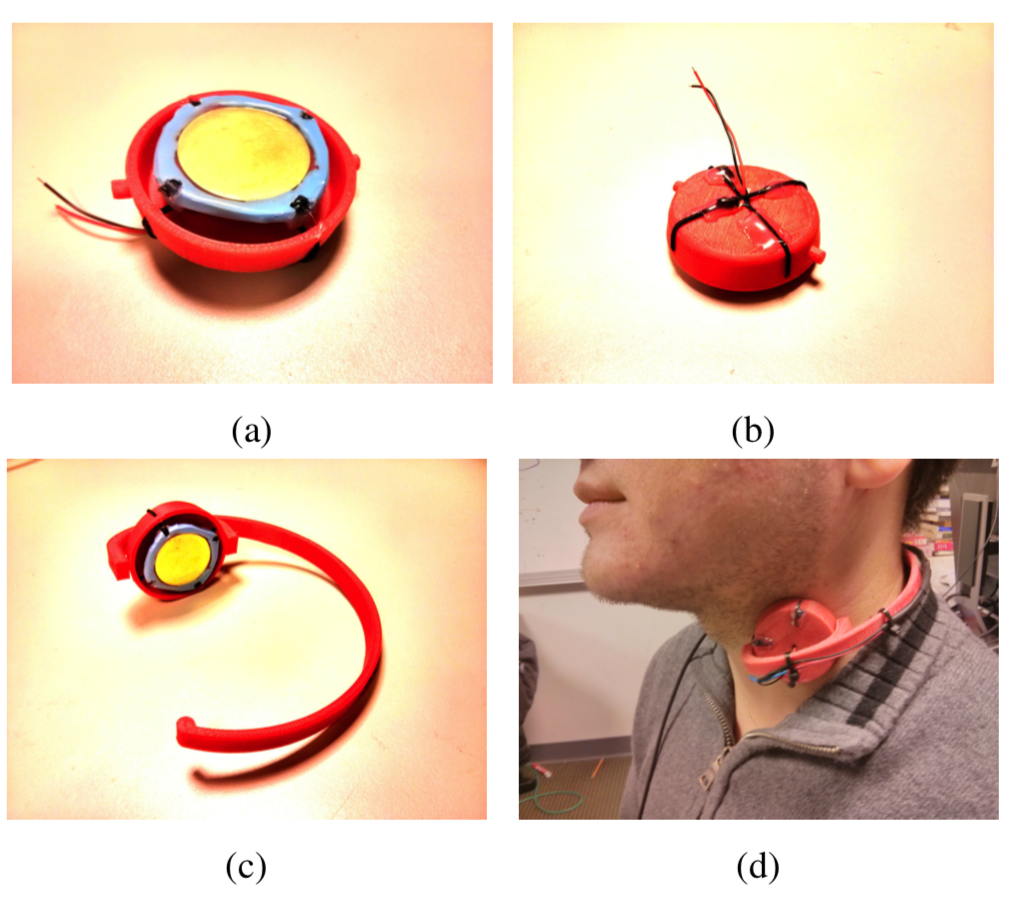

Wearable sensors complement smartphone sensing:

- Form factor: Neck-mounted devices capture throat vibrations, swallowing, and vocalization

- Piezoelectric sensing: Converts mechanical vibration into an electrical signal for detecting eating and drinking

- Continuous monitoring: Unlike a phone, which may sit in a bag or on a desk, a worn device stays in contact with the body and can provide always-on physiological data

- Fusion opportunity: Combine with smartphone accelerometer and audio for robust activity recognition
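One simple way to realize this fusion opportunity is late fusion: each device runs its own classifier, and a weighted average of their per-class probabilities gives the final label. In this Python sketch the source names, activity labels, and weights are hypothetical; a source that produced no output in a given window is simply absent, and the remaining weights are renormalized.

```python
from typing import Dict, Mapping


def late_fusion(per_source: Mapping[str, Mapping[str, float]],
                weights: Mapping[str, float]) -> Dict[str, float]:
    """Weighted average of per-source class probabilities.
    Only sources present in per_source contribute; their weights are renormalized.
    Every source in per_source must have an entry in weights."""
    total_w = sum(weights[source] for source in per_source)
    fused: Dict[str, float] = {}
    for source, probs in per_source.items():
        w = weights[source] / total_w
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + w * p
    return fused


def predict(fused: Mapping[str, float]) -> str:
    """Return the label with the highest fused probability."""
    return max(fused, key=lambda label: fused[label])
```

Late fusion keeps each device's model independent, so a missing phone or a wearable with a dead battery degrades the estimate instead of breaking it.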

Source: Carnegie Mellon University - Building User-Focused Sensing Systems

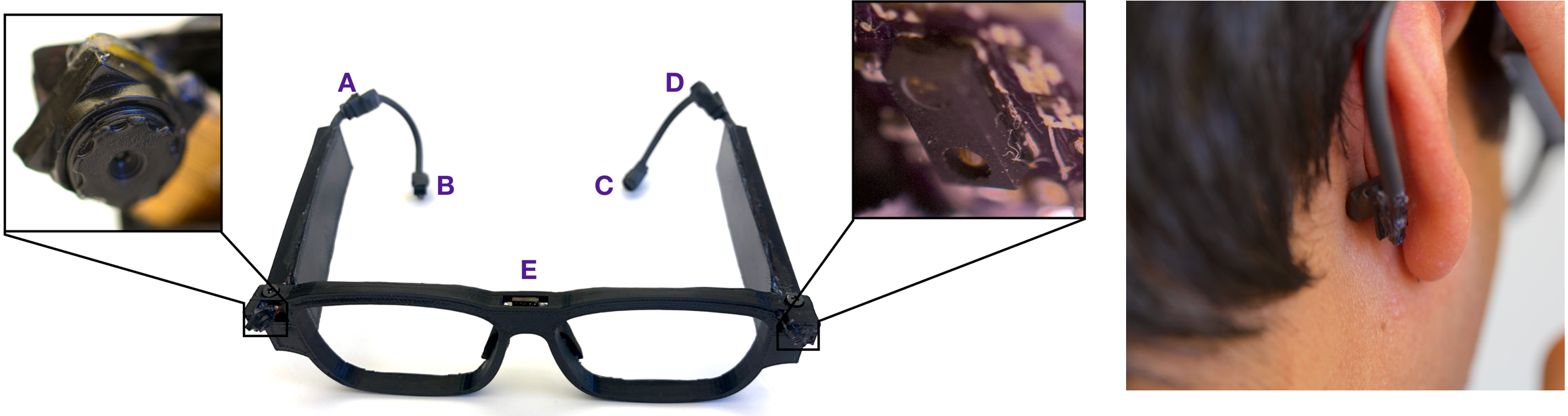

Smart glasses extend mobile sensing capabilities:

- First-person vision: Camera captures what user sees, enabling visual context awareness

- Proximity/gesture: Hand gesture detection near face without touch

- Head motion tracking: IMU measures head orientation and movement patterns

- Bone conduction: Audio feedback without blocking ears, enabling ambient awareness

- Integration with phone: Glasses sensors complement smartphone for richer activity context
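Head orientation from the glasses' IMU is a small instance of sensor fusion. A complementary filter trusts the gyroscope over short intervals and the accelerometer's gravity estimate over long ones, which keeps gyro drift bounded. This Python sketch estimates pitch only; the axis convention in `accel_pitch_rad`, the sample format, and α = 0.98 are assumptions, and yaw would need another reference such as a magnetometer, since gravity carries no yaw information.

```python
import math
from typing import Iterable, List, Optional, Tuple


def accel_pitch_rad(ax: float, ay: float, az: float) -> float:
    """Pitch implied by the gravity vector (assumed axis convention; only
    valid when the head is not otherwise accelerating)."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_pitch(samples: Iterable[Tuple[float, float, float, float]],
                        dt: float, alpha: float = 0.98) -> List[float]:
    """Fuse gyro pitch rate (rad/s) with accelerometer pitch.
    Each sample is (gyro_pitch_rate, ax, ay, az); dt is the sample period in s."""
    pitch: Optional[float] = None
    out: List[float] = []
    for rate, ax, ay, az in samples:
        acc = accel_pitch_rad(ax, ay, az)
        if pitch is None:
            pitch = acc  # initialize from gravity on the first sample
        else:
            # high-pass the integrated gyro, low-pass the accelerometer estimate
            pitch = alpha * (pitch + rate * dt) + (1 - alpha) * acc
        out.append(pitch)
    return out
```

Sampling at 100 Hz (dt = 0.01 s) with a constant gyro bias of 0.01 rad/s, integrating the gyro alone drifts by 0.1 rad over 10 s, while this filter settles near α·bias·dt/(1 − α) ≈ 0.005 rad.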

586.6 Visual Reference Gallery

Explore alternative visual representations of key mobile sensing concepts. These AI-generated figures offer different perspectives on smartphone sensor architectures and applications.

AI-generated modern visualization emphasizing sensor categorization and data flow architecture.

AI-generated geometric visualization highlighting the MEMS sensor structure used in smartphone motion sensing.

DrawIO template showing the complete smartphone sensor stack from hardware to applications.

586.7 Visual Reference Library

This section contains AI-generated educational illustrations of key concepts covered in this chapter. They provide visual reference material for understanding sensing and actuation systems and are meant to complement the technical content.

586.7.1 Skin and Tactile Sensing

586.7.2 Academic Research Illustrations

586.7.3 Sensing Fundamentals

586.8 What’s Next

Now that you understand sensors and actuators in both dedicated IoT devices and smartphones, you’re ready to dive into the electrical foundations that power these systems. The next section covers fundamental electricity concepts essential for understanding power requirements, circuits, and energy management in IoT deployments.

Phone Sensor Technologies:

- Location Awareness: GPS and positioning

- Bluetooth: BLE beacons

- NFC: Near-field communication

Product Examples Using Phone Sensors:

- Fitbit: Phone app integration

User Experience:

- Interface Design: App interfaces

- UX Design: Mobile UX patterns

586.9 Resources

586.9.1 Web APIs

586.9.2 Mobile Frameworks

586.9.3 Research Papers

- “Mobile Phone Sensing Systems: A Survey” (IEEE Communications Surveys)

- “Participatory Sensing: Applications and Architecture” (IEEE Internet Computing)

- “Privacy in Mobile Sensing” (IEEE Pervasive Computing)