%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#2C3E50', 'primaryTextColor': '#fff', 'primaryBorderColor': '#16A085', 'lineColor': '#E67E22', 'secondaryColor': '#16A085', 'tertiaryColor': '#E67E22', 'fontSize': '12px'}}}%%

flowchart TB

GPS[GPS Location Traces<br/>15 months, 1.5M users] --> CLUSTER[Temporal Clustering]

CLUSTER --> HOME[Home Location<br/>Nighttime stays<br/>10 PM - 6 AM]

CLUSTER --> WORK[Work Location<br/>Weekday stays<br/>9 AM - 5 PM]

HOME --> CENSUS1[Census Block 1<br/>~2000 people]

WORK --> CENSUS2[Census Block 2<br/>~2000 people]

CENSUS1 --> INTERSECT[Intersection:<br/>Home x Work]

CENSUS2 --> INTERSECT

INTERSECT --> UNIQUE[Anonymity Set = 1<br/>UNIQUELY IDENTIFIED]

style GPS fill:#16A085,stroke:#0e6655,color:#fff

style HOME fill:#2C3E50,stroke:#16A085,color:#fff

style WORK fill:#2C3E50,stroke:#16A085,color:#fff

style UNIQUE fill:#c0392b,stroke:#a93226,color:#fff

1467 Location Privacy Leaks

1467.1 Learning Objectives

By the end of this chapter, you will be able to:

- Assess Location Data Sensitivity: Understand what location traces reveal about individuals

- Explain De-anonymization Attacks: Describe how attackers re-identify users from “anonymized” location data

- Calculate Anonymity Sets: Determine how many spatiotemporal points uniquely identify users

- Apply Location Privacy Defenses: Implement techniques to reduce location tracking exposure

1467.2 Prerequisites

Before diving into this chapter, you should be familiar with:

- Mobile Data Collection and Permissions: Understanding mobile data types and collection methods

- Privacy Leak Detection: Data flow analysis and taint tracking concepts

Quizzes Hub: Test your understanding of de-anonymization attacks with interactive quizzes. Focus on calculating anonymity set sizes from location traces.

Knowledge Gaps Tracker: A common misconception is that anonymization protects location data. In fact, 4 spatiotemporal points uniquely identify 95% of users. Document your gaps here for targeted review.

1467.3 Introduction

Location data is extremely sensitive and can reveal intimate details about an individual’s life. Even when “anonymized,” location traces remain highly identifiable because of their unique spatiotemporal patterns. This chapter examines why protecting location data is fundamentally different from protecting other data types.

1467.4 What Location Data Reveals

Location data can reveal:

- Home and work addresses

- Daily routines and habits

- Social relationships (who you meet, where)

- Health conditions (hospital visits, pharmacy)

- Religious beliefs (place of worship attendance)

- Political affiliations (rally attendance, campaign offices)

1467.5 De-anonymization Using Location Data

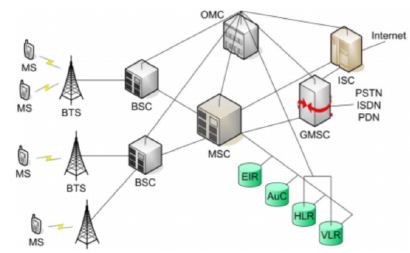

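Research Finding: Knowing a user’s home and work locations at census block granularity reduces the anonymity set to a median size of 1 in the US working population. In other words, the typical worker is the only person with that particular home and work pair.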
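The anonymity set calculation behind this finding can be sketched in a few lines of Python. This is a minimal illustration, not the method of the cited research: the record layout `(user_id, home_block, work_block)`, the function name `anonymity_set_sizes`, and the toy data are all assumptions made for the example.

```python
from collections import Counter
from statistics import median

def anonymity_set_sizes(records):
    """Map each user to the number of users sharing their (home, work) pair.

    records: list of (user_id, home_block, work_block) tuples (hypothetical layout).
    """
    pair_counts = Counter((home, work) for _, home, work in records)
    return {user: pair_counts[(home, work)] for user, home, work in records}

# Hypothetical toy data: five users, three home blocks, three work blocks.
records = [
    ("u1", "H1", "W1"),
    ("u2", "H1", "W2"),
    ("u3", "H2", "W1"),
    ("u4", "H2", "W1"),
    ("u5", "H3", "W3"),
]

sizes = anonymity_set_sizes(records)
median_size = median(sizes.values())
print(sizes)        # u3 and u4 share a pair; everyone else is alone
print(median_size)  # 1
```

Even though each home block and each work block here is shared by up to two or three users, intersecting the two leaves most users in a set of size 1, which is the effect the diagram illustrates.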

1467.5.1 Location Inference Features

Attackers use these features to infer home and work locations:

- Last destination of day (likely home)

- Long stay locations

- Time patterns (work hours vs. home hours)

- Movement speed (walking, driving, public transit)
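As a rough sketch of the time-pattern feature, the snippet below labels the most frequent nighttime cell as home and the most frequent weekday daytime cell as work. The time windows (10 PM to 6 AM, and 9 AM to 5 PM on weekdays) follow the diagram in this chapter; the function name `infer_home_work`, the `(timestamp, cell_id)` input format, and the sample trace are assumptions for illustration only.

```python
from collections import Counter
from datetime import datetime

def infer_home_work(points):
    """Guess (home_cell, work_cell) from an iterable of (datetime, cell_id)."""
    night, workday = Counter(), Counter()
    for ts, cell in points:
        if ts.hour >= 22 or ts.hour < 6:
            night[cell] += 1          # nighttime stay: candidate home
        elif ts.weekday() < 5 and 9 <= ts.hour < 17:
            workday[cell] += 1        # weekday office hours: candidate work
    home = night.most_common(1)[0][0] if night else None
    work = workday.most_common(1)[0][0] if workday else None
    return home, work

# Hypothetical trace: 8 January 2024 is a Monday.
trace = [
    (datetime(2024, 1, 8, 23, 0), "A"),
    (datetime(2024, 1, 9, 2, 0), "A"),
    (datetime(2024, 1, 9, 10, 0), "B"),
    (datetime(2024, 1, 9, 12, 30), "C"),   # lunch stop
    (datetime(2024, 1, 9, 14, 0), "B"),
    (datetime(2024, 1, 13, 11, 0), "D"),   # Saturday, ignored by both windows
]

home, work = infer_home_work(trace)
print(home, work)  # A B
```

A real attack would likely combine this with stay duration and movement speed, but simple counting over time windows is often enough to separate home from work.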

1467.6 Quantifying De-anonymization Risk

Key Research Findings:

| Known Data | Re-identification Result |
|---|---|
| 4 spatiotemporal points | 95% of individuals uniquely identified |
| Home + work location | Median anonymity set = 1 |
| 8 movie ratings | 99% of Netflix users uniquely identified |

Why Location is Worse Than Ratings:

- Data sparsity: Infinite location possibilities (continuous GPS coordinates) vs. discrete choices (5 star ratings)

- Temporal correlations: Sequential activities create unique patterns. A sequence such as gym, then coffee, then office at 7am acts as a fingerprint

- Auxiliary information attacks: Public data enables re-identification via voter registration, property records, social media check-ins

A study of 1.5 million mobile users over 15 months found that 4 spatiotemporal points uniquely identify 95% of individuals. This means that an “anonymized” location dataset with even moderate temporal resolution can function as an identity database for anyone who knows a few places and times a person has been.
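The kind of measurement described above can be approximated with a small sampling experiment: pick a user, reveal `p` of their points, and count how many traces in the dataset contain all of them. The sketch below is an assumption-laden illustration rather than the study's actual procedure. The function name `unicity`, the trace format (a set of `(cell, hour)` tuples per user), and the toy data are all hypothetical.

```python
import random

def unicity(traces, p, trials=100, seed=0):
    """Estimate the fraction of sampled users whose trace is the only one
    containing p points drawn at random from it.

    traces: dict mapping user_id -> set of (cell, hour) tuples.
    """
    rng = random.Random(seed)
    users = [u for u, pts in traces.items() if len(pts) >= p]
    unique = 0
    for _ in range(trials):
        user = rng.choice(users)
        known = set(rng.sample(sorted(traces[user]), p))   # attacker's knowledge
        matches = sum(1 for pts in traces.values() if known <= pts)
        unique += (matches == 1)                           # only the victim matches
    return unique / trials

# Hypothetical toy dataset: three users with overlapping routines.
traces = {
    "u1": {("A", 8), ("B", 9), ("C", 18)},
    "u2": {("A", 8), ("B", 9), ("D", 18)},
    "u3": {("A", 8), ("E", 9), ("C", 18)},
}

for p in (1, 2, 3):
    print(p, unicity(traces, p))
```

On this toy data a single point rarely singles anyone out, while all three points always do. The same pattern, at much larger scale, is what makes a handful of points so identifying.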

1467.7 K-Anonymity Requirements for Mobility

K-anonymity means ensuring each record is indistinguishable from at least K-1 other records.

Different data types require vastly different K values:

| Data Type | Required K | Why |
|---|---|---|
| Movie ratings | K >= 5 | Discrete choices, limited correlations |
| Mobility traces | K >= 5,000 | Continuous space, strong temporal correlations |

Why mobility requires 1,000x more anonymity (K >= 5,000 vs. K >= 5):

1. Continuous space: GPS coordinates are effectively continuous, whereas ratings have only 5 star levels

2. Stronger correlations: Sequential dependencies matter (gym then shower then breakfast is distinct from breakfast then gym)

3. Higher temporal resolution: Second-level timestamps vs. approximate dates

4. Multiple dimensions: Location + time + activity + network simultaneously
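To make the K-anonymity definition concrete, the sketch below groups records by their quasi-identifiers and reports the smallest group. A dataset is K-anonymous only if that smallest group has at least K members. The helper names `smallest_group` and `is_k_anonymous` and the `(cell, hour)` records are assumptions for illustration.

```python
from collections import Counter

def smallest_group(quasi_ids):
    """Size of the smallest group of records sharing identical quasi-identifiers."""
    counts = Counter(quasi_ids)
    return min(counts.values()) if counts else 0

def is_k_anonymous(quasi_ids, k):
    """True if every record is indistinguishable from at least k-1 others."""
    return smallest_group(quasi_ids) >= k

# Hypothetical records: (cell, hour) for four users.
raw = [("A", 8), ("A", 9), ("B", 8), ("B", 9)]

# Generalize by dropping the hour, keeping only the cell.
generalized = [(cell,) for cell, _hour in raw]

print(smallest_group(raw))               # 1: every record is unique
print(smallest_group(generalized))       # 2
print(is_k_anonymous(generalized, 2))    # True
print(is_k_anonymous(generalized, 5000)) # False
```

Reaching K >= 5,000 for mobility data would require generalizing far more aggressively than this, which is one way to see why the requirement is so costly in practice.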

1467.8 Differential Privacy Limitations

1467.9 Location Privacy Attack Example

1467.10 Suspicious Location Access Patterns

1467.11 Location Privacy Defenses

Effective Defenses:

- Limit collection: Use “Only while using” permission when possible

- Coarsen granularity: City-level location for weather apps

- Temporal obfuscation: Delay location-based features by hours

- Dummy locations: Mix real locations with synthetic ones

- Local processing: Perform location-based computations on-device
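Two of the defenses above, coarsening granularity and temporal obfuscation, can be sketched together. The function below rounds coordinates to a coarse grid and snaps the timestamp down to the start of a multi-hour bucket before anything leaves the device. The name `coarsen` and its default parameters are assumptions chosen for the example. One decimal place of latitude corresponds to roughly 11 km, which is in the neighborhood of city-level precision.

```python
from datetime import datetime

def coarsen(lat, lon, ts, decimals=1, hours=6):
    """Reduce spatial and temporal resolution of a single location fix.

    decimals: decimal places kept in latitude/longitude (assumed default: 1).
    hours: width of the time bucket in hours (assumed default: 6).
    """
    coarse_lat = round(lat, decimals)
    coarse_lon = round(lon, decimals)
    bucket_start = ts.replace(
        hour=ts.hour - ts.hour % hours, minute=0, second=0, microsecond=0
    )
    return coarse_lat, coarse_lon, bucket_start

# Hypothetical fix in lower Manhattan at 14:37:12.
result = coarsen(40.7128, -74.0060, datetime(2024, 1, 9, 14, 37, 12))
print(result)  # (40.7, -74.0, datetime.datetime(2024, 1, 9, 12, 0))
```

Coarsening reduces what each report reveals, but it does not by itself guarantee a large anonymity set, so it works best combined with limiting collection in the first place.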

Ineffective Defenses:

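- Simple anonymization: Removing names and IDs is insufficient because the trace itself identifies the user

- Adding noise to individual points: Trajectory reconstruction attacks (for example, using map constraints) can still recover the path

- Hashing location data: The space of possible locations is small enough that every hash can be precomputed (rainbow tables)

- K-anonymity with K less than 5,000: Insufficient for mobility data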
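The weakness of hashing can be shown with a short experiment. The sketch below assumes locations are hashed with SHA-256 after rounding to three decimal places. That scheme, the helper names `hash_cell` and `build_lookup`, and the bounding box are assumptions for the example. It uses a plain precomputed lookup table, which is simpler than a true rainbow table but exploits the same small input space.

```python
import hashlib

def hash_cell(lat, lon):
    """Hash a location rounded to 3 decimal places (hypothetical scheme)."""
    return hashlib.sha256(f"{lat:.3f},{lon:.3f}".encode()).hexdigest()

def build_lookup(lat_range, lon_range):
    """Precompute hash -> (lat, lon) for every grid cell in a bounding box.

    Ranges are inclusive and given in integer millidegrees to avoid
    floating-point drift while iterating.
    """
    table = {}
    for lat_i in range(lat_range[0], lat_range[1] + 1):
        for lon_i in range(lon_range[0], lon_range[1] + 1):
            lat, lon = lat_i / 1000, lon_i / 1000
            table[hash_cell(lat, lon)] = (lat, lon)
    return table

# About 10,000 cells cover a city-sized box; this builds in well under a second.
table = build_lookup((40700, 40800), (-74050, -73950))

leaked_hash = hash_cell(40.713, -74.006)   # what the "anonymized" dataset stores
recovered = table[leaked_hash]
print(len(table), recovered)  # 10201 (40.713, -74.006)
```

Adding a secret salt would block this particular lookup, but it would not address the other weaknesses listed above, since the hashed values still form a linkable trace.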

1467.12 Summary

Location data poses unique privacy challenges:

What Location Reveals:

- Home, work, and frequently visited locations

- Social relationships, health conditions, beliefs

- Daily routines and behavioral patterns

De-anonymization Risks:

- 4 spatiotemporal points identify 95% of individuals

- Home + work location = unique identifier

- K-anonymity requires K >= 5,000 for mobility (1,000x more than ratings)

Why Anonymization Fails:

- Continuous space (fine-grained GPS coordinates)

- Strong temporal correlations

- Auxiliary information attacks

- Map constraints enable trajectory reconstruction

Key Takeaway: Location data is inherently identifiable. Privacy protection requires preventing collection, not trusting post-hoc anonymization.

1467.13 What’s Next

With location privacy risks understood, the next chapter explores Wi-Fi and Sensing Privacy where you’ll learn how Wi-Fi probe requests and motion sensors create additional tracking vectors, and how mobile sensing data enables de-anonymization through behavioral fingerprinting.
