%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#2C3E50', 'primaryTextColor': '#fff', 'primaryBorderColor': '#16A085', 'lineColor': '#16A085', 'secondaryColor': '#E67E22', 'tertiaryColor': '#ecf0f1', 'background': '#ffffff', 'mainBkg': '#2C3E50', 'secondBkg': '#16A085', 'tertiaryBkg': '#E67E22'}}}%%

graph LR

A[50B IoT Devices<br/>Worldwide] --> B[Continuous Sensing<br/>24/7/365]

B --> C[Multiple Sensors<br/>per Device]

C --> D[High Sampling Rates<br/>10-1000 Hz]

D --> E[Rich Data Types<br/>Video, Audio, Text]

E --> F[MASSIVE DATA VOLUME<br/>79.4 ZB by 2025]

style A fill:#2C3E50,stroke:#16A085,color:#fff

style B fill:#16A085,stroke:#2C3E50,color:#fff

style C fill:#2C3E50,stroke:#16A085,color:#fff

style D fill:#16A085,stroke:#2C3E50,color:#fff

style E fill:#2C3E50,stroke:#16A085,color:#fff

style F fill:#E67E22,stroke:#2C3E50,color:#fff

1259 Big Data Fundamentals

Learning Objectives

After completing this chapter, you will be able to:

- Understand the characteristics of big data in IoT contexts

- Identify the 5 V’s of big data (Volume, Velocity, Variety, Veracity, Value)

- Analyze why traditional databases cannot handle IoT scale

- Calculate the economics of distributed versus centralized processing

1259.1 Getting Started (For Beginners)

Big Data is like having a SUPER memory that never forgets anything!

1259.1.1 The Sensor Squad Adventure: The Mountain of Messages

One sunny morning, Signal Sam woke up to find something amazing - and a little scary! During the night, all the sensors in the smart house had been sending messages, and now there was a HUGE pile of data sitting in Signal Sam’s inbox.

“Whoa!” gasped Sam, looking at the mountain of messages. “Sunny sent 86,400 light readings yesterday. Thermo sent 86,400 temperature readings. Motion Mo detected movement 2,347 times. And Power Pete tracked every single watt of electricity - that’s over 300,000 data points!”

Sunny the Light Sensor flew over. “Is that… too much data?”

“Not if we’re smart about it!” Sam grinned. “This is what grown-ups call Big Data. Let me teach you the 5 V’s - they’re like our five superpowers for handling all this information!”

V #1 - Volume (The GIANT pile): “See this mountain? That’s Volume - how MUCH data we have. It’s like trying to count every grain of sand on a beach!”

V #2 - Velocity (The SPEED demon): Motion Mo zoomed by. “That’s me! I send 10 readings every SECOND, even when nobody is moving. Velocity means how FAST new data arrives - like trying to drink from a fire hose!”

V #3 - Variety (The MIX master): “Look at all the different types!” said Thermo. “Numbers from me, pictures from cameras, sounds from microphones. Variety means different KINDS of data mixed together.”

V #4 - Veracity (The TRUTH teller): Power Pete spoke up seriously. “Sometimes sensors make mistakes. Veracity means checking if the data is TRUE and ACCURATE.”

V #5 - Value (The TREASURE finder): “And the best part,” Sam announced, “is finding the gold nuggets in all this data! Value means turning mountains of numbers into useful answers - like knowing exactly when to water the plants or when someone might slip on the stairs.”

The Sensor Squad cheered! They had learned that Big Data isn’t scary - it’s just lots of little pieces of information that tell an amazing story when you put them together!

1259.1.2 Key Words for Kids

| Word | What It Means |
|---|---|
| Big Data | So much information that regular computers can’t handle it - like trying to fit an ocean in a bathtub! |
| Volume | How MUCH data there is - measured in terabytes (that’s a TRILLION bytes!) |
| Velocity | How FAST data is coming in - some sensors send thousands of readings per second |
| Variety | Different TYPES of data mixed together - numbers, pictures, sounds, and more |
| Veracity | Making sure the data is TRUE and correct - like double-checking your homework |
| Value | The useful ANSWERS we find hidden in all that data - the treasure! |

1259.1.3 Try This at Home!

Be a Data Detective for One Day:

- Pick ONE thing to track for a whole day (like how many times you open the fridge)

- Write down the TIME and what happened each time (8:00am - got milk, 12:30pm - got cheese…)

- At the end of the day, count your entries. That’s your Volume!

- Look at how often you made entries. That’s your Velocity!

- Think about patterns: “I open the fridge most around mealtimes!” That’s finding Value!

Now imagine if EVERYTHING in your house was keeping track like you did - the lights, the doors, the TV, the thermostat. THAT’S why IoT creates Big Data!

Challenge: Can you calculate how many data points your house might create in one day if every device sent just ONE message per minute? (Hint: 1 device x 60 minutes x 24 hours = 1,440. Now multiply by how many devices you have!)

1259.1.4 What is Big Data? (Simple Explanation)

The Refrigerator Analogy

Imagine your refrigerator could talk. Every second, it tells you:

- Current temperature: 37.2 degrees F

- Door status: Closed

- Compressor: Running

- Ice maker: Full

That’s 4 pieces of information per second, or 345,600 data points per day - just from your fridge!

Now imagine EVERY appliance in your home doing this:

- Refrigerator: 345,600/day

- Thermostat: 86,400/day

- Smart lights (10): 864,000/day

- Security cameras (4): roughly 170 GB/day of video (at about 4 Mbps per 1080p camera)
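Every per-day count above comes from the same conversion: readings per second x 86,400 seconds per day x number of devices. A minimal Python sketch, assuming each device reports at a fixed rate (the rates are taken from the examples above; the cameras are left out because their output is measured in bytes, not readings):

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# (device, how many, readings per second each), taken from the examples above
home_devices = [
    ("refrigerator", 1, 4),  # temperature, door, compressor, ice maker
    ("thermostat", 1, 1),
    ("smart light", 10, 1),
]


def readings_per_day(count: int, per_second: float) -> int:
    """Daily data points for `count` devices that each send `per_second` readings."""
    return round(count * per_second * SECONDS_PER_DAY)


total = 0
for name, count, rate in home_devices:
    daily = readings_per_day(count, rate)
    total += daily
    print(f"{name:>12}: {daily:,}/day")
print(f"{'whole home':>12}: {total:,}/day")
```

This adds up to 1,296,000 readings per day before counting any video. Calling `readings_per_day(1, 1/60)` gives the 1,440 readings per day from the one-message-per-minute challenge.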

A single smart home can generate more data in one day than many traditional businesses did in an entire year in 1990.

That’s why we need “big data” technology - not because we want to, but because traditional tools literally cannot keep up.

Analogy: Think of big data like trying to drink from a fire hose - too much data for normal tools to handle.

Regular data is like a garden hose: manageable, you can store it in a bucket, and one person can control it. Big data is like a fire hose: massive volume, coming incredibly fast, and you need special equipment (big data systems) and a whole team to handle it safely!

Why IoT creates Big Data:

- An estimated 50 billion IoT devices worldwide (2025)

- Each device sends 100+ readings per day

- 50 billion x 100 = 5 trillion data points every single day

- That’s like every person on Earth posting about 600 social media updates per day!
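The same back-of-the-envelope math at global scale, as a short Python sketch. The 50 billion devices and 100 readings per day come from the list above; the world population of about 8 billion is an added assumption used only for the comparison:

```python
DEVICES = 50_000_000_000           # ~50 billion IoT devices (estimate used above)
READINGS_PER_DEVICE_PER_DAY = 100  # lower bound used above
WORLD_POPULATION = 8_000_000_000   # assumption: roughly 8 billion people

daily_readings = DEVICES * READINGS_PER_DEVICE_PER_DAY
per_person = daily_readings / WORLD_POPULATION
per_second = daily_readings / 86_400  # average arrival rate across the planet

print(f"{daily_readings:,} readings/day")
print(f"about {per_person:.0f} readings per person per day")
print(f"about {per_second:,.0f} readings/second on average")
```

The per-person figure comes out at 625, which is where the "600 updates" comparison comes from. The average of almost 58 million readings per second is more than a thousand times what a single database server can ingest.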

1259.1.5 The 5 V’s of Big Data (Made Simple)

| V | Meaning | IoT Example |
|---|---|---|
| Volume | HOW MUCH data | 50 billion devices x 100 readings/day = 5 trillion readings/day |
| Velocity | HOW FAST data arrives | Self-driving car: 1 GB per SECOND |
| Variety | HOW MANY types | Temp, video, GPS, audio, text, all in different formats |
| Veracity | HOW ACCURATE | Is that sensor reading correct or is it broken? |
| Value | WHAT IT’S WORTH | Can we actually use this data to make decisions? |

1259.1.6 Why Does IoT Create So Much Data?

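IoT Data Explosion Factors: five factors multiply together (billions of devices, continuous 24/7 sensing, multiple sensors per device, high sampling rates, and rich data types such as video and audio), so volume compounds from single readings to zettabytes of data per year. Each IoT domain stresses the 5 V’s differently: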

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#2C3E50', 'primaryTextColor': '#fff', 'primaryBorderColor': '#16A085', 'lineColor': '#16A085', 'secondaryColor': '#E67E22', 'tertiaryColor': '#ecf0f1'}}}%%

flowchart TB

subgraph SmartCity["Smart City Traffic"]

SC1[Volume: HIGH<br/>10K cameras]

SC2[Velocity: VERY HIGH<br/>Real-time feeds]

SC3[Variety: HIGH<br/>Video + sensors]

SC4[Veracity: MEDIUM<br/>Weather affects]

SC5[Value: HIGH<br/>Safety critical]

end

subgraph Factory["Smart Factory"]

F1[Volume: MEDIUM<br/>1K sensors]

F2[Velocity: HIGH<br/>1000 Hz sampling]

F3[Variety: LOW<br/>Numeric sensors]

F4[Veracity: VERY HIGH<br/>Calibrated]

F5[Value: VERY HIGH<br/>Downtime costly]

end

subgraph Vehicle["Connected Vehicle"]

V1[Volume: EXTREME<br/>4 TB/day]

V2[Velocity: EXTREME<br/>Real-time safety]

V3[Variety: HIGH<br/>Camera+LIDAR+GPS]

V4[Veracity: HIGH<br/>Safety-grade]

V5[Value: CRITICAL<br/>Life safety]

end

subgraph Agri["Agricultural IoT"]

A1[Volume: LOW<br/>100 sensors]

A2[Velocity: LOW<br/>1 reading/hour]

A3[Variety: MEDIUM<br/>Soil+weather]

A4[Veracity: MEDIUM<br/>Outdoor noise]

A5[Value: HIGH<br/>Crop yield]

end

style SmartCity fill:#2C3E50,stroke:#16A085,color:#fff

style Factory fill:#16A085,stroke:#2C3E50,color:#fff

style Vehicle fill:#E67E22,stroke:#2C3E50,color:#fff

style Agri fill:#27AE60,stroke:#2C3E50,color:#fff

In one sentence: Big data for IoT is not about storing everything - it is about building distributed systems that can process continuous streams faster than data arrives.

Remember this: When IoT data overwhelms your system, scale horizontally (add more nodes) rather than vertically (buy a bigger server). A single server hits a hard ceiling, while a distributed system keeps growing as you add nodes.

1259.1.7 The Problem: Can’t Use Regular Databases!

| Challenge | Regular Database | Big Data System |
|---|---|---|
| Data Size | GB (gigabytes) | PB (petabytes = 1M GB) |
| Processing | One computer | Thousands of computers |
| Speed | Minutes to query | Seconds to query |
| Data Types | Tables only | Tables, images, video, JSON |
| Cost | Expensive per GB | Cheap at scale |

1259.2 Why Traditional Databases Can’t Handle IoT

Think about how a traditional database works - it’s like a librarian organizing books. One librarian can shelve about 100 books per hour. What happens when trucks start delivering 10,000 books per hour?

1259.2.1 The Single Server Problem

A typical database server can process about 10,000-50,000 simple operations per second. That sounds like a lot, until you do the math for IoT:

| Scenario | Data Generation Rate | Traditional DB Capacity | Gap |
|---|---|---|---|
| Smart home (20 sensors) | 20 readings/second | Easily handled | None |
| Small factory (500 sensors) | 5,000 readings/second | Near limit | Small |
| Smart city (100,000 sensors) | 1,000,000 readings/second | 20x over capacity | Massive |
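The Gap column is a simple ratio: required write rate divided by what one server sustains. A minimal Python sketch that turns it into a server count, assuming the 10,000-50,000 ops/sec range quoted above and per-sensor rates inferred from the table (1 reading/sec in the home, 10 readings/sec in the factory and the city):

```python
import math


def servers_needed(readings_per_sec: int, ops_per_server: int) -> int:
    """Minimum servers to absorb a write rate, with no headroom for peaks."""
    return math.ceil(readings_per_sec / ops_per_server)


# Aggregate write rates from the table above (sensors x readings/sec each)
scenarios = {
    "smart home": 20 * 1,
    "small factory": 500 * 10,
    "smart city": 100_000 * 10,
}

for name, rate in scenarios.items():
    best = servers_needed(rate, 50_000)   # optimistic: 50K ops/sec per server
    worst = servers_needed(rate, 10_000)  # conservative: 10K ops/sec per server
    print(f"{name:>13}: {rate:>9,} readings/sec -> {best}-{worst} server(s)")
```

The smart city needs 20-100 servers even before adding headroom for peaks or replicas, which is the gap no single machine can close.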

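Rough Single-Server Figures (sustained single-row inserts; batching, indexing, and hardware can shift these by an order of magnitude or more):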

- MySQL (single server): ~5,000 inserts/second sustained

- PostgreSQL (single server): ~10,000 inserts/second sustained

- Oracle (high-end server): ~50,000 inserts/second sustained

- IoT Smart City: 1,000,000+ sensor readings/second required

Even the most expensive database server hits a physical limit - one CPU can only process so many operations per second, and one hard drive can only write so fast.

1259.2.2 The Bottleneck Visualized

Imagine a single door to a stadium. Even if 50,000 people need to enter, only one person can go through at a time. Traditional databases have this same bottleneck - all data must pass through one processing point.

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#2C3E50', 'primaryTextColor': '#fff', 'primaryBorderColor': '#16A085', 'lineColor': '#16A085', 'secondaryColor': '#E67E22', 'tertiaryColor': '#ecf0f1'}}}%%

flowchart TB

subgraph Traditional["Traditional Database - Single Bottleneck"]

T1[100,000 Sensors<br/>1M readings/sec] --> T2[Single Server<br/>10K ops/sec MAX]

T2 --> T3[BOTTLENECK<br/>99% data lost]

T3 --> T4[Database<br/>Only 1% stored]

end

subgraph BigData["Big Data - Distributed Processing"]

B1[100,000 Sensors<br/>1M readings/sec] --> B2[Load Balancer<br/>Distributes work]

B2 --> B3[Server 1<br/>10K ops/sec]

B2 --> B4[Server 2<br/>10K ops/sec]

B2 --> B5[... 100 servers ...<br/>10K each]

B2 --> B6[Server 100<br/>10K ops/sec]

B3 & B4 & B5 & B6 --> B7[Distributed Storage<br/>1M ops/sec TOTAL]

end

style T1 fill:#2C3E50,stroke:#16A085,color:#fff

style T2 fill:#E67E22,stroke:#2C3E50,color:#fff

style T3 fill:#E74C3C,stroke:#2C3E50,color:#fff

style T4 fill:#E74C3C,stroke:#2C3E50,color:#fff

style B1 fill:#2C3E50,stroke:#16A085,color:#fff

style B2 fill:#16A085,stroke:#2C3E50,color:#fff

style B3 fill:#2C3E50,stroke:#16A085,color:#fff

style B4 fill:#2C3E50,stroke:#16A085,color:#fff

style B5 fill:#7F8C8D,stroke:#2C3E50,color:#fff

style B6 fill:#2C3E50,stroke:#16A085,color:#fff

style B7 fill:#27AE60,stroke:#2C3E50,color:#fff

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#2C3E50', 'primaryTextColor': '#fff', 'primaryBorderColor': '#16A085', 'lineColor': '#16A085', 'secondaryColor': '#E67E22', 'tertiaryColor': '#ecf0f1'}}}%%

flowchart TB

subgraph Vertical["Vertical Scaling (Scale Up)"]

direction TB

V1[Small Server<br/>4 CPU, 16GB RAM<br/>$500] --> V2[Medium Server<br/>16 CPU, 64GB RAM<br/>$2,000]

V2 --> V3[Large Server<br/>64 CPU, 256GB RAM<br/>$15,000]

V3 --> V4[Maximum Server<br/>128 CPU, 1TB RAM<br/>$100,000]

V4 --> V5[CEILING HIT<br/>Cannot scale further]

end

subgraph Horizontal["Horizontal Scaling (Scale Out)"]

direction TB

H1[1 Node<br/>10K ops/sec] --> H2[10 Nodes<br/>100K ops/sec]

H2 --> H3[100 Nodes<br/>1M ops/sec]

H3 --> H4[1000 Nodes<br/>10M ops/sec]

H4 --> H5[NO CEILING<br/>Add more nodes as needed]

end

subgraph Cost["Cost Efficiency at Scale"]

C1[10K ops/sec<br/>Vertical: $500<br/>Horizontal: $500]

C2[100K ops/sec<br/>Vertical: $15,000<br/>Horizontal: $5,000]

C3[1M ops/sec<br/>Vertical: IMPOSSIBLE<br/>Horizontal: $50,000]

end

style V5 fill:#E74C3C,stroke:#2C3E50,color:#fff

style H5 fill:#27AE60,stroke:#2C3E50,color:#fff

style Vertical fill:#E67E22,color:#fff

style Horizontal fill:#16A085,color:#fff

style Cost fill:#2C3E50,color:#fff

Single Server Bottleneck vs Distributed Processing: Traditional databases force all data through one processing point creating a bottleneck that loses 99% of IoT data; distributed big data systems spread the load across 100+ servers to handle full data volume.
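The "Load Balancer" box in the first diagram can be as simple as hashing each sensor ID to choose a server, so every reading from one sensor lands on the same node. A minimal Python sketch of hash partitioning, assuming 100 nodes, 10 readings/sec per sensor, and hypothetical sensor IDs (real systems use their own partitioning schemes):

```python
import hashlib
from collections import Counter

NUM_NODES = 100           # assumption: matches the 100-server diagram above
READINGS_PER_SENSOR = 10  # readings/sec, as in the smart city example


def node_for(sensor_id: str, num_nodes: int = NUM_NODES) -> int:
    """Pick a node with a stable hash, so one sensor always lands on the same node."""
    digest = hashlib.sha256(sensor_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


# Hypothetical IDs for 100,000 city sensors
sensor_ids = [f"sensor-{i:06d}" for i in range(100_000)]
sensors_per_node = Counter(node_for(s) for s in sensor_ids)

rates = [n * READINGS_PER_SENSOR for n in sensors_per_node.values()]
print(f"nodes in use: {len(sensors_per_node)}")
print(f"per-node load: {min(rates):,}-{max(rates):,} readings/sec (even split: 10,000)")
```

Each node ends up with roughly 1,000 sensors, or about 10,000 readings/sec, which matches one server's capacity in the diagram. One caveat of plain `hash % num_nodes`: changing the node count remaps most sensors, which is why distributed stores commonly use consistent hashing or a fixed set of partitions instead.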

1259.2.3 Why Can’t We Just Buy a Bigger Server?

The Law of Diminishing Returns:

| Server Option | Cost | Throughput | Cost per op/sec of Capacity |
|---|---|---|---|
| Basic server | $1,000 | 1,000 ops/sec (baseline) | $1.00 |
| Mid-tier server | $10,000 | 5,000 ops/sec (5x faster) | $2.00 (worse!) |
| High-end server | $100,000 | 20,000 ops/sec (20x faster) | $5.00 (much worse!) |
| 100 basic servers | $100,000 | 100,000 ops/sec (100x faster) | $1.00 (same as baseline!) |
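The last column of the table is price divided by sustained throughput, in dollars per op/sec of capacity. A few lines of Python reproduce it from the table's own numbers, which makes it easy to try other price points:

```python
# (option, total price in dollars, sustained throughput in ops/sec), from the table above
options = [
    ("basic server", 1_000, 1_000),
    ("mid-tier server", 10_000, 5_000),
    ("high-end server", 100_000, 20_000),
    ("100 basic servers", 100 * 1_000, 100 * 1_000),
]

baseline_ops = options[0][2]
cost_per_ops = {}
for name, price, ops in options:
    cost_per_ops[name] = price / ops  # dollars per op/sec of capacity
    print(f"{name:>17}: {ops / baseline_ops:>4.0f}x throughput, "
          f"${cost_per_ops[name]:.2f} per op/sec")
```

The price per unit of capacity rises 5x on the way up to the high-end server, while the 100-server cluster stays at the baseline $1.00 because it multiplies both price and throughput by 100.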

The Physics Problem:

- Single-server speed is ultimately limited by the speed of light (signals travel ~1 foot per nanosecond)

- Larger servers with more RAM and CPUs hit coordination overhead

- Hard drives have maximum rotation speeds (7,200-15,000 RPM)

- Common network cards run at 10-100 Gbps, and even the fastest top out in the hundreds of Gbps

You can’t just “buy faster” - you hit physical limits. The solution is horizontal scaling (many cheap servers) not vertical scaling (one expensive server).

1259.3 The Economics of Scale

This seems backwards: more data should cost more, right? It does in total, but the cost per unit of capacity drops. Here’s why:

1259.3.1 Traditional Database Costs

Example: Processing 1 Million Sensor Readings Per Second

| Component | Cost | Capacity | Total Cost |
|---|---|---|---|
| High-performance server | $50,000 | 50,000 ops/sec | $50,000 |
| Need 20 servers to handle load | x20 | 1M ops/sec | $1,000,000 |
| Oracle Enterprise licenses | $47,500/CPU x 4 CPUs x 20 servers | - | $3,800,000 |
| 5-year total cost | Maintenance $200K/year | - | $5,800,000 |

Cost per op/sec of capacity (5-year total): $5,800,000 / 1,000,000 ops/sec = $5.80

1259.3.2 Big Data Approach Costs

Same Workload: 1 Million Sensor Readings Per Second

| Component | Cost | Capacity | Total Cost |
|---|---|---|---|
| 100 commodity servers | $500 each | 10,000 ops/sec each | $50,000 |
| Open-source software | Free (Hadoop, Cassandra) | 1M ops/sec total | $0 |
| 5-year total cost | Maintenance $10K/year | - | $100,000 |

Cost per op/sec of capacity (5-year total): $100,000 / 1,000,000 ops/sec = $0.10

Cost reduction: 58x cheaper!
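A minimal Python sketch of the same five-year comparison, using only the figures from the two tables above. It divides each total by capacity (1M ops/sec), which is the per-op/sec figure both sections report:

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    hardware: float              # one-time hardware cost, dollars
    licenses: float              # one-time software licenses, dollars
    maintenance_per_year: float  # dollars per year
    capacity_ops: float          # sustained ops/sec

    def total_cost(self, years: int) -> float:
        return self.hardware + self.licenses + self.maintenance_per_year * years


traditional = Deployment("traditional", 20 * 50_000, 47_500 * 4 * 20, 200_000, 1_000_000)
big_data = Deployment("big data", 100 * 500, 0, 10_000, 1_000_000)

YEARS = 5
for d in (traditional, big_data):
    tco = d.total_cost(YEARS)
    print(f"{d.name:>11}: ${tco:,.0f} over {YEARS} years, "
          f"${tco / d.capacity_ops:.2f} per op/sec of capacity")

ratio = traditional.total_cost(YEARS) / big_data.total_cost(YEARS)
print(f"big data approach: {ratio:.0f}x cheaper")
```

Dividing by total operations instead (1M ops/sec sustained for five years is about 1.6 x 10^14 operations) gives under 4 cents per million operations for the traditional stack, so it is worth stating which denominator a cost figure uses.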

1259.3.3 The Cloud Multiplier

Cloud providers like AWS, Google, and Azure buy millions of servers. Their cost per server is much lower than buying one yourself. When you use their big data services, you benefit from:

- Volume discounts on hardware: for example, they might pay $200 per server (vs your $500)

- Shared infrastructure costs: with heavy automation, one admin might manage 10,000 servers (vs your 1 admin per 10 servers)

- Pay only for what you use: Process 1M readings/sec during peak hours, 10K/sec at night (pay for average, not peak)
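The pay-per-use point can be made concrete by counting node-hours. A minimal Python sketch, assuming a hypothetical day with 8 peak hours at 1M readings/sec and 16 quiet hours at 10K readings/sec, and nodes that each handle 10,000 readings/sec (the hour split is an illustration, not a figure from the text):

```python
OPS_PER_NODE = 10_000  # assumption: capacity of one node, readings/sec

# Hypothetical hourly load for one day, in readings/sec
hourly_load = [1_000_000] * 8 + [10_000] * 16


def nodes_for(load: int) -> int:
    """Nodes needed to carry `load` readings/sec (ceiling division)."""
    return -(-load // OPS_PER_NODE)


# Owned hardware: sized for the peak and paid for around the clock
provisioned_node_hours = nodes_for(max(hourly_load)) * len(hourly_load)

# Pay-per-use: cluster resized every hour to match the load
elastic_node_hours = sum(nodes_for(load) for load in hourly_load)

savings = 1 - elastic_node_hours / provisioned_node_hours
print(f"peak-sized: {provisioned_node_hours} node-hours/day")
print(f"elastic:    {elastic_node_hours} node-hours/day ({savings:.0%} fewer)")
```

Under these assumptions the elastic cluster uses 816 node-hours instead of 2,400, about two-thirds less, before counting any difference in hourly price.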

Real Example: Smart City Traffic Analysis

| Approach | Upfront Cost | Annual Cost | 10-Year Total |
|---|---|---|---|
| Traditional DB | $500,000 (servers + licenses) | $100,000/year (maintenance) | $1,500,000 |
| Self-hosted Big Data | $50,000 (servers) | $20,000/year (maintenance) | $250,000 |
| Cloud Big Data (AWS EMR) | $0 (no upfront) | $50,000/year (pay-per-use) | $500,000 |

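Key Insight: Cloud is 3x cheaper than traditional databases ($500,000 vs $1,500,000 over 10 years), even though it “looks” more expensive per hour ($10/hour sounds expensive, but you only run it when needed). Self-hosted big data is cheaper still (6x), if you have the staff to run the cluster yourself.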

1259.4 The Four Vs of Big Data in IoT

1259.4.1 Volume: The Scale Challenge

| IoT Domain | Data Generated | Storage Challenge |
|---|---|---|
| Smart city (1M sensors) | ~1 PB/year | Need distributed storage |
| Autonomous vehicle | ~1-4 TB/day per car (estimates vary) | Edge processing essential |
| Industrial IoT factory | ~1 GB/hour per line | Time-series databases |
| SKA Telescope | 12 Exabytes/year (raw) | Too much to store raw; must be reduced first |

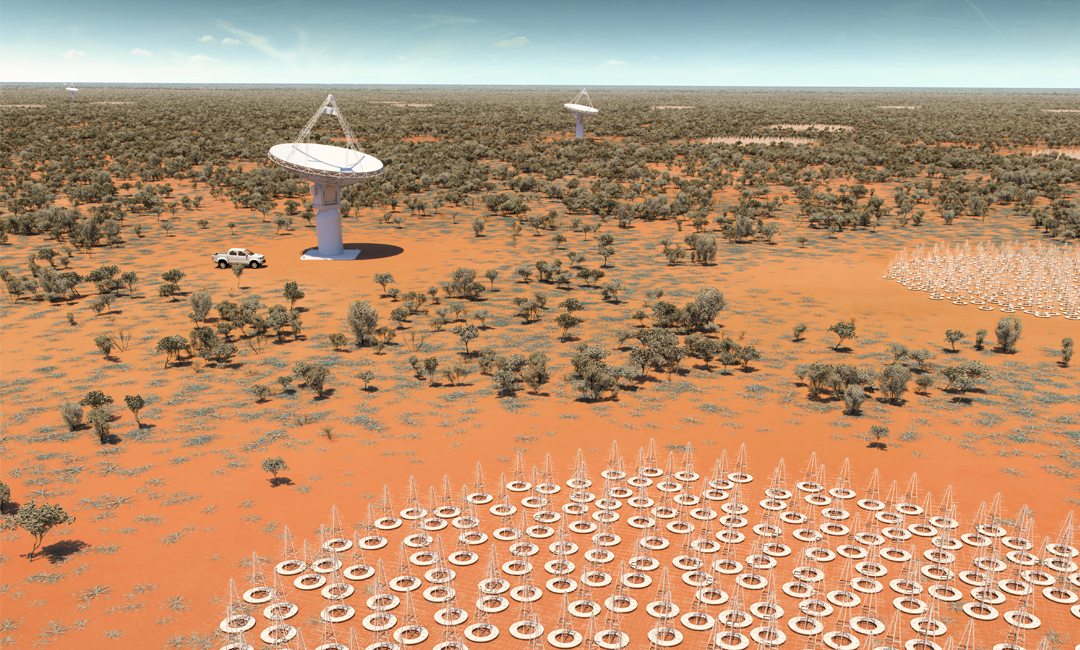

1259.4.2 Case Study: Square Kilometre Array (SKA)

The SKA radio telescope is one of the largest sensor networks ever designed, which makes it a useful extreme case for IoT-scale data:

| Metric | Value | Comparison |
|---|---|---|
| Antennas | 130,000+ | More sensors than most smart cities |
| Raw data rate | 12 Exabytes/year | ~33 PB every day |
| After processing | 300 PB/year | Still massive (~0.8 PB/day) |
| Network bandwidth | 100 Gbps | About 1,000 home connections at 100 Mbps each |

Key insight: Even after processing and filtering cut the raw stream by roughly 40x, SKA still produces 300 PB/year. Raw sensor data can grow faster than it is practical to store, so reduction has to happen close to the source.
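Converting a yearly volume into the sustained link speed it needs shows why processing cannot wait. A short Python sketch using the figures from the SKA table (decimal units; it assumes data flows evenly all year):

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000
PB, EB = 10**15, 10**18                # decimal petabyte and exabyte, in bytes


def sustained_gbps(bytes_per_year: int) -> float:
    """Link speed (gigabits/sec) needed to move a yearly volume at an even rate."""
    return bytes_per_year * 8 / SECONDS_PER_YEAR / 1e9


raw = sustained_gbps(12 * EB)
processed = sustained_gbps(300 * PB)
reduction = (12 * EB) / (300 * PB)

print(f"raw:       {raw:,.0f} Gbps")
print(f"processed: {processed:,.0f} Gbps")
print(f"reduction: {reduction:.0f}x")
```

The raw stream works out to about 3,000 Gbps, roughly thirty times the 100 Gbps link in the table, while the processed 300 PB/year needs about 76 Gbps and fits. On these numbers, most of the reduction has to happen before data crosses that link.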

1259.4.3 Velocity: Real-Time Requirements

| Application | Data Rate | Latency Requirement |
|---|---|---|
| Smart meter | 1 reading/15 min | Minutes OK |
| Traffic sensor | 10 readings/sec | Seconds |
| Industrial vibration | 10,000 samples/sec | Milliseconds |
| Autonomous vehicle | 1 GB/sec | <100ms or crash |
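One way to read the table: if events arrive at rate r per second, a single sequential consumer has on average 1/r seconds per event before a backlog starts growing. A small Python sketch, assuming one consumer processing events one at a time (the autonomous vehicle row is left out because it is given in bytes, not events):

```python
# Event rates from the table above, in events per second
workloads = {
    "smart meter": 1 / (15 * 60),  # one reading every 15 minutes
    "traffic sensor": 10,
    "industrial vibration": 10_000,
}


def budget_ms(events_per_sec: float) -> float:
    """Average time one sequential consumer may spend per event, in milliseconds."""
    return 1000.0 / events_per_sec


for app, rate in workloads.items():
    print(f"{app:>20}: {budget_ms(rate):,.1f} ms per event")
```

The vibration stream leaves 0.1 ms per sample, which is one reason high-rate streams are processed in batches or spread across parallel consumers. This is a throughput limit; the latency column is a separate deadline on top of it.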

1259.4.4 Variety: Heterogeneous Data

IoT generates diverse data types that must be integrated:

- Structured: Sensor readings (temperature: 22.5 degrees C)

- Semi-structured: JSON logs, MQTT messages

- Unstructured: Camera images, audio streams

- Time-series: Continuous sensor streams

- Geospatial: GPS coordinates, location data
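Integrating these types usually means mapping each source onto one common record shape. A minimal Python sketch for the structured and semi-structured cases, where the CSV layout, the JSON field names (`device`, `time`, `values`), and the `Reading` record are all hypothetical choices for illustration:

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class Reading:
    """Common record shape that every source is mapped onto (hypothetical)."""
    sensor_id: str
    timestamp: datetime
    metric: str
    value: float


def from_csv_row(row: str) -> Reading:
    """Structured source: 'sensor_id,unix_seconds,metric,value'."""
    sensor_id, ts, metric, value = row.split(",")
    when = datetime.fromtimestamp(int(ts), tz=timezone.utc)
    return Reading(sensor_id, when, metric, float(value))


def from_mqtt_json(payload: bytes) -> list[Reading]:
    """Semi-structured source: one JSON message carrying several metrics."""
    msg = json.loads(payload)
    when = datetime.fromisoformat(msg["time"])
    return [Reading(msg["device"], when, name, float(v)) for name, v in msg["values"].items()]


readings = [from_csv_row("thermo-7,1700000000,temperature_c,22.5")]
readings += from_mqtt_json(
    b'{"device": "gps-3", "time": "2023-11-14T22:13:20+00:00",'
    b' "values": {"lat": 51.5, "lon": -0.12}}'
)
for r in readings:
    print(r)
```

Unstructured data such as camera frames does not fit this shape; a common approach is to store the file elsewhere and keep only a reference and metadata in the record.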

1259.4.5 Veracity: Data Quality Issues

| Issue | IoT Example | Mitigation |
|---|---|---|
| Sensor drift | Temperature offset over time | Regular calibration |
| Missing data | Network packet loss | Interpolation, redundancy |
| Outliers | Spike from electrical noise | Statistical filtering |
| Duplicates | Retry on timeout | Deduplication at ingestion |
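Three of the mitigations in the table (deduplication, statistical filtering, and interpolation) fit in a short Python sketch. The outlier test here is the modified z-score based on the median absolute deviation (MAD), chosen as one common robust filter; the 3.5 threshold and the (sensor_id, timestamp, value) tuple format are assumptions. Sensor drift is not covered, since it needs a calibration reference rather than the data alone:

```python
from statistics import median


def dedupe(readings):
    """Drop retried messages: keep the first reading for each (sensor_id, timestamp)."""
    seen, unique = set(), []
    for sensor_id, ts, value in readings:
        if (sensor_id, ts) not in seen:
            seen.add((sensor_id, ts))
            unique.append((sensor_id, ts, value))
    return unique


def drop_outliers(values, threshold=3.5):
    """Replace spikes with None using the modified z-score (median absolute deviation)."""
    present = [v for v in values if v is not None]
    med = median(present)
    mad = median(abs(v - med) for v in present)
    if mad == 0:
        return list(values)
    # 0.6745 makes the score comparable to a standard z-score for normal data
    return [None if v is not None and 0.6745 * abs(v - med) / mad > threshold else v
            for v in values]


def interpolate(values):
    """Fill interior gaps (None) linearly between the nearest known neighbours."""
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            filled[i] = filled[a] + (filled[b] - filled[a]) * (i - a) / (b - a)
    return filled


raw = [
    ("t1", 0, 21.0),
    ("t1", 1, 21.2),
    ("t1", 1, 21.2),  # duplicate from a retry on timeout
    ("t1", 2, 85.0),  # spike from electrical noise
    ("t1", 3, None),  # reading lost to packet loss
    ("t1", 4, 21.8),
    ("t1", 5, 22.0),
]
clean = dedupe(raw)
values = interpolate(drop_outliers([v for _, _, v in clean]))
print(values)
```

Here the duplicate is dropped, the 85.0 spike is flagged, and both gaps are filled linearly, giving a smooth ramp from 21.0 to 22.0 in steps of about 0.2.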

%%{init: {'theme': 'base', 'themeVariables': { 'primaryColor': '#2C3E50', 'primaryTextColor': '#fff', 'primaryBorderColor': '#16A085', 'lineColor': '#16A085', 'secondaryColor': '#E67E22', 'tertiaryColor': '#ecf0f1'}}}%%

graph TB

subgraph FiveVs["The 5 V's of Big Data"]

V1[VOLUME<br/>Petabytes to Zettabytes<br/>50B devices x data/sec]

V2[VELOCITY<br/>Real-time Streaming<br/>1000s events/sec]

V3[VARIETY<br/>Structured, Semi, Unstructured<br/>Sensors, Video, Logs, JSON]

V4[VERACITY<br/>Data Quality and Trust<br/>Noise, Missing, Outliers]

V5[VALUE<br/>Actionable Insights<br/>Business Decisions]

end

V1 --> Processing[Big Data<br/>Processing]

V2 --> Processing

V3 --> Processing

V4 --> Processing

Processing --> V5

style V1 fill:#2C3E50,stroke:#16A085,color:#fff

style V2 fill:#16A085,stroke:#2C3E50,color:#fff

style V3 fill:#2C3E50,stroke:#16A085,color:#fff

style V4 fill:#16A085,stroke:#2C3E50,color:#fff

style V5 fill:#E67E22,stroke:#2C3E50,color:#fff

style Processing fill:#7F8C8D,stroke:#2C3E50,color:#fff

The 5 V’s Framework: Volume, Velocity, Variety, and Veracity characteristics of IoT data must be processed effectively to extract Value through big data technologies.

1259.5 Self-Check Questions

Before continuing, make sure you understand:

- What makes data “big”? (Answer: Volume, Velocity, Variety. It is too much, too fast, and too varied for normal databases)

- Why can’t we just use a bigger regular database? (Answer: Even the biggest single computer can’t handle petabytes and real-time streaming)

- What’s the most important ‘V’ for IoT? (Answer: Velocity. IoT data arrives constantly and needs real-time processing)

1259.6 Summary

- Big data in IoT is characterized by the 5 V’s: Volume (massive scale from billions of devices), Velocity (high-speed streaming data), Variety (structured, semi-structured, and unstructured formats), Veracity (data quality and trustworthiness), and Value (extracting actionable insights).

- Traditional databases struggle at IoT scale because single servers have physical limits: a single PostgreSQL server sustaining roughly 10,000 single-row inserts/second falls far short of the 1,000,000+ writes/second a smart city may need.

- Horizontal scaling beats vertical scaling on cost and capability: 100 commodity servers cost $50,000 and handle 1M ops/sec, while the traditional approach needs $1M in high-end database servers alone ($5.8M over five years with licenses and maintenance).

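- Cloud big data services cost about 3x less than traditional databases in the smart city example, thanks to volume discounts, shared infrastructure, and pay-per-use pricing; self-hosted big data was cheaper still, at the price of running the cluster yourself.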

1259.7 What’s Next

Now that you understand why big data technologies are necessary, continue to:

- Edge Processing for Big Data - Learn the 90/10 rule that reduces data volume by 99%

- Big Data Technologies - Explore specific technologies like Hadoop, Spark, and Kafka

- Big Data Overview - Return to the chapter index